Research

Overview

- Easy evaluation and comparison of brightness perception models

- Experimental characterization of lightness constancy

- Evaluation of experimental techniques to measure appearance

- An early vision model of lightness perception

- Increments and decrements in naturalistic stimuli

- Depth perception in 3d scenes

- Manual dexterity in humans and robots

Easy evaluation and comparison of brightness perception models

Various models of human brightness perception can account for perceived brightness on specific sets of input stimuli (e.g. specific brightness illusions). However, there is no structured overview of how each of these models performs on a larger test battery that includes stimuli and tasks the model was not specifically designed for. This makes both qualitative and quantitative comparisons of existing brightness perception models difficult. Our goal is to develop an open-source framework that enables easy evaluation and comparison of different brightness perception models on different input stimuli. To this end, we will develop a user-friendly pipeline in which users can select multiple brightness perception models and input stimuli and receive easily interpretable output on model performance.
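The model-by-stimulus pipeline described above could be organized roughly as follows. This is a minimal, hypothetical sketch in plain Python, not the framework's actual interface: the names `MODELS`, `STIMULI`, `evaluate`, the two toy models and the pass/fail criterion are our own illustrative assumptions. Each model maps a 2d grid of gray values to a same-sized map of predicted brightness. Each test case stores two images plus a probed pixel, with the human result that the target looks brighter in the first image. The only case here is simultaneous brightness contrast, where a gray target on a dark background looks brighter than the same target on a light background.

```python
from typing import Callable, Dict, List, Tuple

Image = List[List[float]]         # 2d grid of gray values in [0, 1]
Model = Callable[[Image], Image]  # image in, predicted brightness map (same shape) out
TestCase = Tuple[Image, Image, Tuple[int, int]]


def luminance_model(img: Image) -> Image:
    """Hypothetical baseline: predicted brightness simply equals luminance."""
    return [row[:] for row in img]


def local_contrast_model(img: Image, radius: int = 2) -> Image:
    """Hypothetical toy model: pixel value minus the mean of its square neighbourhood."""
    h, w = len(img), len(img[0])
    out: Image = []
    for r in range(h):
        row = []
        for c in range(w):
            window = [img[i][j]
                      for i in range(max(0, r - radius), min(h, r + radius + 1))
                      for j in range(max(0, c - radius), min(w, c + radius + 1))]
            row.append(img[r][c] - sum(window) / len(window))
        out.append(row)
    return out


def square_target(background: float, target: float = 0.5, size: int = 9, half: int = 1) -> Image:
    """Uniform background with a (2*half+1)-pixel square target in the centre."""
    img = [[background] * size for _ in range(size)]
    mid = size // 2
    for r in range(mid - half, mid + half + 1):
        for c in range(mid - half, mid + half + 1):
            img[r][c] = target
    return img


# Test case: (image where humans see the target as brighter, comparison image, probed pixel).
STIMULI: Dict[str, TestCase] = {
    "SBC": (square_target(0.0), square_target(1.0), (4, 4)),
}
MODELS: Dict[str, Model] = {
    "luminance": luminance_model,
    "local_contrast": local_contrast_model,
}


def evaluate(models: Dict[str, Model], stimuli: Dict[str, TestCase]) -> Dict[str, Dict[str, bool]]:
    """A model passes a case if it predicts the same direction of effect as human observers."""
    results: Dict[str, Dict[str, bool]] = {}
    for m_name, model in models.items():
        results[m_name] = {}
        for s_name, (brighter, other, (r, c)) in stimuli.items():
            diff = model(brighter)[r][c] - model(other)[r][c]
            results[m_name][s_name] = diff > 0
    return results


if __name__ == "__main__":
    for m_name, row in evaluate(MODELS, STIMULI).items():
        print(m_name, {s: "pass" if ok else "fail" for s, ok in row.items()})
    # expected: luminance fails SBC (no difference at the target), local_contrast passes
```

Adding a model or a stimulus then only means adding an entry to the corresponding dictionary; a real test battery would need graded scores rather than pass/fail and far more than one illusion.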

Experimental characterization of lightness constancy

We currently study perceptual constancy in the domain of lightness. Human observers perceive the lightness of surfaces as relatively stable despite tremendous fluctuations in the sensory signal caused by changes in viewing conditions. The luminance that reaches the eye from one and the same surface can vary substantially depending on whether the surface is directly illuminated or lies in shadow.
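To make the size of this fluctuation concrete, here is a back-of-the-envelope calculation assuming an ideal matte (Lambertian) surface, for which luminance $L$ (cd/m²) equals reflectance $\rho$ times illuminance $E$ (lux) divided by $\pi$. The reflectance and illuminance values are illustrative choices, not measurements from our experiments.

```latex
L = \frac{\rho\,E}{\pi}
\qquad
\begin{aligned}
\text{gray } (\rho = 0.5),\ \text{direct light } (E = 1000\,\mathrm{lx}):&\quad L = 500/\pi \approx 159\,\mathrm{cd/m^2}\\
\text{gray } (\rho = 0.5),\ \text{shadow } (E = 100\,\mathrm{lx}):&\quad L = 50/\pi \approx 15.9\,\mathrm{cd/m^2}\\
\text{white } (\rho = 0.9),\ \text{shadow } (E = 100\,\mathrm{lx}):&\quad L = 90/\pi \approx 28.6\,\mathrm{cd/m^2}
\end{aligned}
```

In this example the same gray surface changes its luminance tenfold, and a white surface in shadow sends less light to the eye than a gray surface in direct light, so luminance alone cannot determine perceived lightness.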

Evaluation of experimental techniques to measure appearance

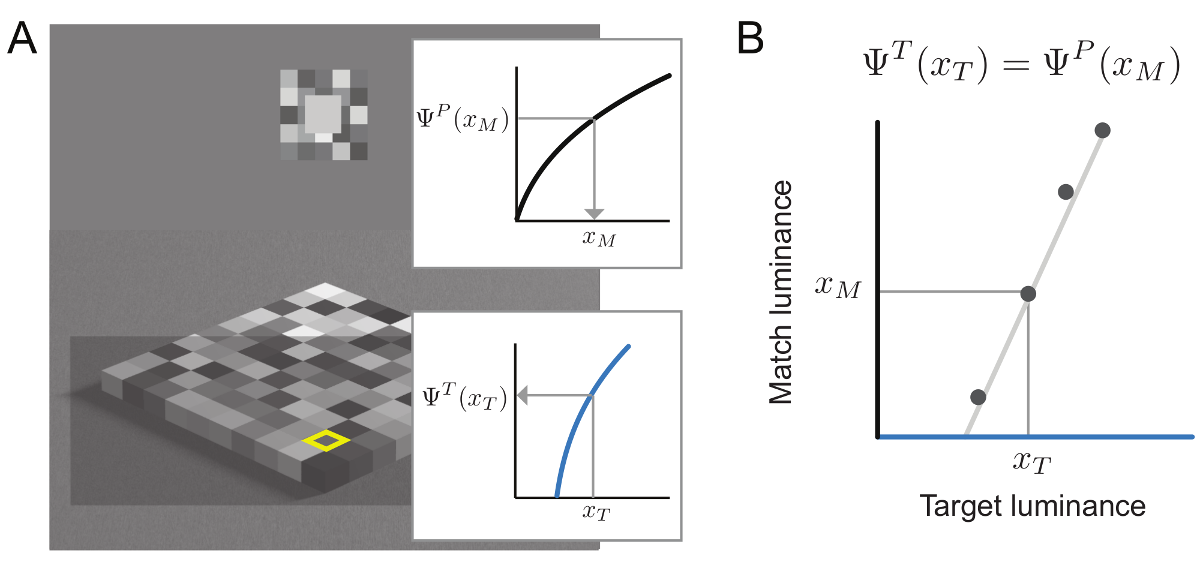

The appearance of visual stimuli is commonly measured with matching procedures. In such an experiment, the observer adjusts an external reference stimulus until it looks the same as the target; usually the target is presented in a different context or viewing condition, e.g. under a different illumination. Although prevalent in the literature, matching tasks are usually difficult for the observer, do not guarantee subjective equality (observers may settle for a 'minimal difference' match), and provide data that are only an indirect measure of the underlying perceptual dimension. As an alternative, we have explored scaling methods for measuring the appearance of visual stimuli presented across different viewing contexts. Specifically, we have evaluated Maximum Likelihood Difference Scaling (MLDS) and Maximum Likelihood Conjoint Measurement (MLCM). Here is our recent poster on this topic.

Matching procedures and underlying perceptual processes. After Wiebel et al. (2017).
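To illustrate what fitting MLDS involves, here is a minimal Python/NumPy sketch of the standard triad version (Maloney & Yang, 2003); it is not our analysis code and not the MLDS R package. It assumes ordered triads a < b < c, a response of 1 when the pair (b, c) is judged more different than (a, b), and a decision variable (ψ_c − ψ_b) − (ψ_b − ψ_a) plus Gaussian noise with standard deviation σ. Fixing ψ_0 = 0 turns the fit into a probit GLM without intercept, solved here by iteratively reweighted least squares. The function names and the simulated observer (seven levels, compressive true scale, σ = 0.2) are hypothetical.

```python
import itertools
import math

import numpy as np

_erf = np.vectorize(math.erf)


def norm_cdf(x: np.ndarray) -> np.ndarray:
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + _erf(x / math.sqrt(2.0)))


def norm_pdf(x: np.ndarray) -> np.ndarray:
    """Standard normal density."""
    return np.exp(-0.5 * x**2) / math.sqrt(2.0 * math.pi)


def design_matrix(triads, n: int) -> np.ndarray:
    """Row for triad (a, b, c), a < b < c: +1 at a, -2 at b, +1 at c.

    The decision variable is psi_a - 2 psi_b + psi_c; column 0 is dropped because psi_0 = 0.
    """
    X = np.zeros((len(triads), n))
    for i, (a, b, c) in enumerate(triads):
        X[i, a] += 1.0
        X[i, b] -= 2.0
        X[i, c] += 1.0
    return X[:, 1:]


def fit_mlds(triads, responses, n: int, max_iter: int = 100, tol: float = 1e-8):
    """Maximum likelihood probit GLM fit (no intercept) by IRLS.

    Returns the scale anchored at psi_0 = 0 and psi_{n-1} = 1, and sigma on that scale.
    """
    X = design_matrix(triads, n)
    y = np.asarray(responses, dtype=float)
    beta = np.zeros(X.shape[1])
    for _ in range(max_iter):
        eta = X @ beta
        mu = np.clip(norm_cdf(eta), 1e-10, 1.0 - 1e-10)
        dmu = np.maximum(norm_pdf(eta), 1e-10)
        w = dmu**2 / (mu * (1.0 - mu))   # IRLS weights for the probit link
        z = eta + (y - mu) / dmu         # working response
        XtW = X.T * w                    # X^T diag(w)
        beta_new = np.linalg.solve(XtW @ X, XtW @ z)
        converged = np.max(np.abs(beta_new - beta)) < tol
        beta = beta_new
        if converged:
            break
    psi = np.concatenate([[0.0], beta])  # scale in units of the noise sigma
    return psi / psi[-1], 1.0 / psi[-1]


# Simulated observer (hypothetical): all triads of 7 levels, 100 repetitions each.
rng = np.random.default_rng(0)
n = 7
psi_true = np.linspace(0.0, 1.0, n) ** 0.5
sigma_true = 0.2
triads = [t for t in itertools.combinations(range(n), 3) for _ in range(100)]
decision = np.array([psi_true[a] - 2 * psi_true[b] + psi_true[c] for a, b, c in triads])
responses = (decision + rng.normal(0.0, sigma_true, len(triads)) > 0).astype(int)

psi_hat, sigma_hat = fit_mlds(triads, responses, n)
print(np.round(psi_hat, 2), round(float(sigma_hat), 3))
```

Because the observer is simulated from a known scale, the recovered ψ and σ can be compared with the true values, which is a quick sanity check before fitting real responses.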

An early vision model of lightness perception

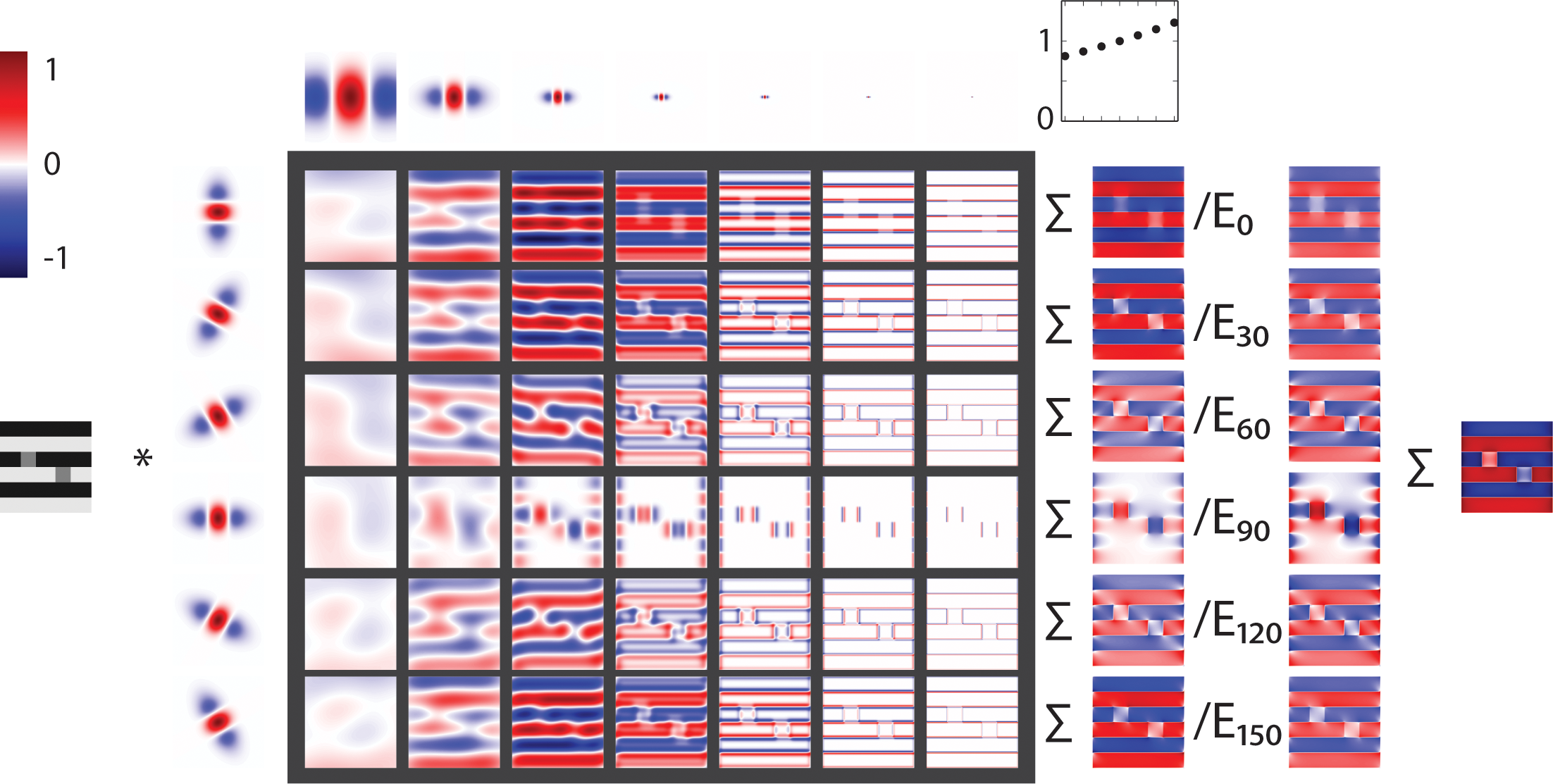

Our goal is to develop a mechanistic account of the computational principles that transform retinal sensations into meaningful percepts. We address this goal in the domain of lightness perception and want to understand how surface lightness is determined from the luminance signal in the retina. A computational model of lightness should take a 2d matrix of gray values as input and compute the perceived lightness at every image position as output.

Response of ODOG model to White's illusion stimulus from Betz et al. (2015).
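A minimal sketch of the input/output contract described above, in Python with NumPy: the function takes a 2d array of gray values and returns a response map of the same shape. As a deliberately simple stand-in we use a single isotropic difference-of-Gaussians filter; this is not ODOG, which combines oriented filters at several orientations and spatial scales with response normalization. The function names, the stimulus and the filter sizes (`sigma_center`, `sigma_surround`) are illustrative assumptions.

```python
import numpy as np


def gaussian_kernel_1d(sigma: float) -> np.ndarray:
    """Normalized 1d Gaussian, truncated at 3 sigma."""
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-x**2 / (2 * sigma**2))
    return k / k.sum()


def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur; edge padding keeps the output the same shape as the input."""
    k = gaussian_kernel_1d(sigma)
    r = len(k) // 2
    padded = np.pad(img, r, mode="edge")
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def dog_model(img, sigma_center: float = 1.0, sigma_surround: float = 4.0) -> np.ndarray:
    """Hypothetical toy model: 2d gray values in, 2d response map of the same shape out."""
    img = np.asarray(img, dtype=float)
    if img.ndim != 2:
        raise ValueError("expected a 2d matrix of gray values")
    return gaussian_blur(img, sigma_center) - gaussian_blur(img, sigma_surround)


def sbc_stimulus(background: float, target: float = 0.5, size: int = 64,
                 target_size: int = 16) -> np.ndarray:
    """Simultaneous brightness contrast: a gray square on a uniform background."""
    img = np.full((size, size), background, dtype=float)
    lo = (size - target_size) // 2
    img[lo:lo + target_size, lo:lo + target_size] = target
    return img


dark = dog_model(sbc_stimulus(0.0))
light = dog_model(sbc_stimulus(1.0))
mid = dark.shape[0] // 2
print(dark.shape, dark[mid, mid] > light[mid, mid])  # (64, 64) True
```

On this display the response at the target center is higher on the dark background, matching the direction of simultaneous contrast; a full lightness model would also have to map such responses onto a perceptual lightness scale.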

Increments and decrements in naturalistic stimuli

In this project, we study under which conditions increments and decrements are matched with targets of opposite polarity.

Depth perception in 3d scenes

The perception of surface lightness is influenced by the depth arrangement of a scene. To study depth perception under naturalistic conditions, we collaborate with the Computer Graphics lab. We use 3d-printed objects created from mesh models. Because these mesh models are available, a corresponding image of each 3d object can be rendered on the computer. We test perceptual responses to the 3d objects and to their 2d counterparts.

Manual dexterity in humans and robots

Human grasping skills are far superior to those of robots. In collaboration with the Robotics lab, we investigate the principles that underlie this superior performance in human grasping and work towards transferring these principles to robotic systems. Our key hypothesis is that human grasping performance crucially depends on the purposeful exploitation of contact with the environment.